Natural Language Processing: Its Tasks, Challenges, Tools & Future

What is Natural Language Processing?

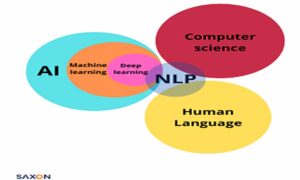

In computer science, Natural Language Processing (NLP) is a field of artificial intelligence (AI) that focuses on the interaction between computers and human language. It involves developing algorithms, models, and techniques that enable machines to understand, interpret, and generate human language in a meaningful way.

TechRepublic defines NLP this way: “When you communicate with your computer, tablet, phone, or smart assistant (either through speaking or a chatbox), and your electronic device understands what you’re saying — that’s due to natural language processing”.

What Are the Various Tasks Performed by NLP?

NLP aims to bridge the gap between human language and the formal representations computers work with. This allows machines to process and analyze vast amounts of text, extract useful information, and perform tasks that require an understanding of human language. These tasks include, but are not limited to:

- Sentiment Analysis: Determining the sentiment or emotional tone of a piece of text, such as identifying whether a customer review is positive or negative.

- Named Entity Recognition: Identifying and classifying named entities such as names of people, organizations, locations, and dates within a text.

- Machine Translation: Automatically translating text from one language to another, such as translating English sentences to French.

- Question Answering: Understanding a question in natural language and providing a relevant and accurate answer based on a given text or knowledge base.

- Text Summarization: Generating concise and coherent summaries of long documents or articles.

- Language Generation: Creating human-like text, such as generating responses in chatbots or writing news articles.

NLP techniques leverage various approaches, including statistical models, rule-based systems, and machine learning algorithms. Machine learning, particularly deep learning, has played a significant role in advancing NLP, allowing models to learn from vast amounts of text data and improve their language understanding and generation capabilities.

Challenges Faced By Natural Language Processing

NLP faces several challenges, including ambiguity, context sensitivity, and the intricacies of human language. Language can be nuanced, with variations in meaning, idioms, and cultural references, making it a complex area to navigate. Nonetheless, ongoing research and development in NLP continue to push the boundaries of what machines can achieve in understanding and processing natural language, enabling a wide range of applications in fields like customer support, information retrieval, content analysis, and much more.

History of NLP

The history of Natural Language Processing (NLP) dates back to the 1950s and has evolved significantly over the decades. Here are some key milestones and developments in the history of NLP:

Early Foundations (1950s-1960s):

- In the 1950s, Alan Turing proposed the concept of the “Turing Test,” which aimed to determine a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human.

- The Georgetown-IBM experiment in 1954 involved translating Russian sentences into English using a machine, marking one of the earliest attempts at machine translation.

- In the 1960s, early rule-based systems such as ELIZA, which simulated conversation through pattern matching, showcased the potential of NLP.
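ELIZA's core idea is to match the user's input against a pattern and fill in a canned response template. That idea can be sketched in a few lines of Python. The rules, pronoun reflections, and function names below are hypothetical and far simpler than the original ELIZA script. The sketch illustrates the technique and is not a reconstruction of the program.

```python
import re

# Pronoun swaps so the echoed fragment reads from the program's point of view.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# (pattern, response template) pairs tried in order; these rules are hypothetical examples.
RULES = [
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r".*\bmother\b.*", re.IGNORECASE), "Tell me more about your family."),
]
FALLBACK = "Please go on."

def reflect(fragment):
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())

def respond(utterance):
    """Answer with the template of the first rule that matches the whole utterance."""
    cleaned = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            # Captured groups are pronoun-reflected before being slotted into the template.
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK

print(respond("I need my coffee."))    # Why do you need your coffee?
print(respond("I am tired"))           # How long have you been tired?
print(respond("The weather is nice"))  # Please go on.
```

The program has no model of meaning. It only rearranges the user's own words, which is why such systems break down as soon as the input falls outside their patterns.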

Knowledge-Based Systems (1970s-1980s):

- During this period, researchers focused on rule-based approaches, using knowledge bases and grammatical rules to process and understand language.

- Systems like SHRDLU (1970) demonstrated the ability to understand and act on natural language input within a restricted domain, a simulated “blocks world.”

- The development of parsing algorithms, like the Earley parser, improved the ability to analyze and understand the grammatical structure of sentences.

Statistical and Machine Learning Approaches (1990s-2000s):

- The 1990s saw a shift towards statistical and machine learning techniques, driven by the availability of large-scale language corpora and computational power.

- Hidden Markov Models (HMM) and statistical language models, such as n-grams, became prominent for tasks like speech recognition and language modeling.

- The Porter Stemming Algorithm, published by Martin Porter in 1980, became a widely used tool for reducing words to their base or root form, aiding in text processing.

- The rise of the World Wide Web provided vast amounts of textual data for training and improving NLP models.
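A bigram model (an n-gram model with n = 2) estimates the probability of each word given only the previous word, using counts from a corpus. The sketch below covers n-gram language modeling only, not HMMs. It uses a tiny made-up corpus and maximum-likelihood estimates with no smoothing. The function names are illustrative.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Maximum-likelihood estimates of P(word | previous word) from tokenized sentences."""
    counts = defaultdict(Counter)
    for tokens in sentences:
        # <s> and </s> mark sentence boundaries so first and last words are modeled too.
        padded = ["<s>"] + tokens + ["</s>"]
        for prev, word in zip(padded, padded[1:]):
            counts[prev][word] += 1
    return {
        prev: {word: n / sum(followers.values()) for word, n in followers.items()}
        for prev, followers in counts.items()
    }

def sentence_probability(model, tokens):
    """Product of bigram probabilities; unseen bigrams give 0 because there is no smoothing."""
    padded = ["<s>"] + tokens + ["</s>"]
    prob = 1.0
    for prev, word in zip(padded, padded[1:]):
        prob *= model.get(prev, {}).get(word, 0.0)
    return prob

corpus = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]
model = train_bigram_model(corpus)
# P(cat | the) = 2/3 and P(sat | cat) = 1/2, so P(the cat sat) = 1 * 2/3 * 1/2 * 1 = 1/3.
print(sentence_probability(model, ["the", "cat", "sat"]))
print(sentence_probability(model, ["the", "dog", "ran"]))  # 0.0: "dog ran" never occurs
```

The zero probability for an unseen but perfectly valid sentence shows why practical n-gram models add smoothing.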

Deep Learning and Neural Networks (2010s-present):

- The resurgence of neural networks and the advent of deep learning had a profound impact on NLP.

- Word embeddings, such as Word2Vec and GloVe, represented words as dense vector representations, capturing semantic relationships between words.

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks enabled the modeling of sequential and contextual information in language.

- Transformers, introduced by the “Attention Is All You Need” paper in 2017, revolutionized NLP tasks, leading to significant improvements in machine translation and language understanding.

- Pre-trained language models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) achieved state-of-the-art results across various NLP tasks.
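The core operation of the Transformer is scaled dot-product attention from the 2017 paper. It computes softmax(QKᵀ / √d_k) V, where Q, K, and V are the query, key, and value matrices and d_k is the key dimension. The plain-Python sketch below shows only that single operation on toy vectors. It leaves out the learned projections, multiple heads, and masking that a full Transformer layer uses, and the function names are illustrative.

```python
import math

def softmax(scores):
    """Softmax over a list of floats, shifted by the max for numerical stability."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V, with each matrix given as a list of row vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Scaled dot-product similarity between this query and every key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output row is the attention-weighted average of the value rows.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs

# Toy example: the query is more similar to the first key, so the first value dominates.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
print(scaled_dot_product_attention(Q, K, V))  # roughly [[6.70, 3.30]]
```

Every output is a weighted mix of all value vectors, so each position can draw on context from anywhere in the sequence. The attention weights themselves are computed from the data.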

The field of NLP continues to evolve rapidly, with ongoing research and advancements focusing on improving language understanding, generation, and the development of more robust and domain-specific models.

How Does NLP Work?

At a high level, Natural Language Processing (NLP) involves the use of algorithms and techniques to enable computers to understand, interpret, and generate human language.

NLP = NLU + NLG

NLP is commonly divided into two subfields: Natural Language Understanding (NLU) and Natural Language Generation (NLG).

Here’s a simplified overview of how NLP works:

1. Text Preprocessing:

The first step in NLP is to preprocess the text data. This typically involves tasks like tokenization (splitting text into individual words or tokens), removing punctuation and stopwords (common words with little semantic value like “the” or “and”), and normalizing the text (converting to lowercase, handling contractions, etc.).
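The preprocessing steps above can be sketched with Python's standard `re` module. This is a minimal illustration under stated assumptions. The stopword list and contraction map are tiny hypothetical samples, and tokens are assumed to be runs of ASCII letters or digits. Libraries such as NLTK or spaCy provide far more thorough tokenizers and word lists.

```python
import re

# Tiny illustrative stopword list; libraries such as NLTK ship much longer ones.
STOPWORDS = {"the", "and", "a", "an", "is", "are", "of", "to", "in"}

# Hypothetical contraction map covering only a few common cases.
CONTRACTIONS = {"don't": "do not", "it's": "it is", "can't": "cannot"}

def preprocess(text):
    """Lowercase, expand contractions, tokenize, then drop punctuation and stopwords."""
    text = text.lower()
    for short, full in CONTRACTIONS.items():
        # Word boundaries stop "it's" from matching inside words like "visit's".
        text = re.sub(r"\b" + re.escape(short) + r"\b", full, text)
    # Tokens are runs of letters or digits; punctuation and spaces act as separators.
    tokens = re.findall(r"[a-z0-9]+", text)
    return [token for token in tokens if token not in STOPWORDS]

print(preprocess("The cat and the dog don't play in the garden!"))
# ['cat', 'dog', 'do', 'not', 'play', 'garden']
```

Contractions are expanded before tokenizing because the tokenizer would otherwise split "don't" into "don" and "t".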

2. Language Understanding:

- Part-of-Speech (POS) Tagging: NLP models assign grammatical labels (such as noun, verb, adjective) to each word in a sentence, enabling analysis of the syntactic structure.

- Named Entity Recognition (NER): NER identifies and classifies named entities like names of people, organizations, locations, or dates within the text.

- Parsing: Parsing involves analyzing the grammatical structure of a sentence, determining relationships between words, and creating a parse tree or syntactic structure.
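Part-of-speech tagging, the first step in the list above, can be illustrated with a rule-based tagger. It looks words up in a lexicon and falls back to suffix heuristics for unknown words. The lexicon, suffix rules, and simplified tag names below are hypothetical assumptions, loosely modeled on Universal Dependencies labels. Modern taggers instead learn tags statistically from annotated corpora.

```python
# Hypothetical mini-lexicon; real taggers learn tags from annotated corpora.
LEXICON = {
    "the": "DET", "a": "DET", "an": "DET",
    "cat": "NOUN", "dog": "NOUN", "mat": "NOUN",
    "sat": "VERB", "runs": "VERB",
    "on": "ADP", "in": "ADP",
    "big": "ADJ", "small": "ADJ",
}

# Suffix heuristics for words missing from the lexicon, checked in order.
SUFFIX_RULES = [("ly", "ADV"), ("ing", "VERB"), ("ed", "VERB"), ("ous", "ADJ"), ("ful", "ADJ")]

def pos_tag(tokens):
    """Tag each token from the lexicon, then suffix rules, defaulting to NOUN."""
    tagged = []
    for token in tokens:
        word = token.lower()
        tag = LEXICON.get(word)
        if tag is None:
            tag = next((t for suffix, t in SUFFIX_RULES if word.endswith(suffix)), "NOUN")
        tagged.append((token, tag))
    return tagged

print(pos_tag(["The", "big", "cat", "quickly", "jumped", "on", "the", "mat"]))
# [('The', 'DET'), ('big', 'ADJ'), ('cat', 'NOUN'), ('quickly', 'ADV'), ('jumped', 'VERB'), ('on', 'ADP'), ('the', 'DET'), ('mat', 'NOUN')]
```

Defaulting unknown words to NOUN is a common baseline choice, because nouns are the most frequent open-class category. A tagger like this still ignores context, so it cannot tell the noun from the verb in words like "book".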

3. Semantic Analysis:

- Sentiment Analysis: Sentiment analysis aims to determine the sentiment or emotional tone of a piece of text, classifying it as positive, negative, or neutral.

- Entity Sentiment Analysis: This task involves determining the sentiment associated with specific entities or aspects mentioned in the text.
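A simple way to approach document-level sentiment analysis is with a lexicon of scored words plus basic negation handling. The word scores and negation list below are hypothetical, and the input is assumed to be already lowercased and tokenized. Production systems typically use much larger lexicons or trained models, and entity-level sentiment would additionally need to link each opinion to its target.

```python
# Hypothetical word scores; practical sentiment lexicons are much larger and carefully weighted.
SENTIMENT_LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
NEGATIONS = {"not", "never", "no"}

def classify_sentiment(tokens):
    """Label lowercase tokens as positive, negative, or neutral from summed word scores."""
    score = 0
    for i, word in enumerate(tokens):
        value = SENTIMENT_LEXICON.get(word, 0)
        # Flip the polarity of a word that directly follows a negation ("not good").
        if i > 0 and tokens[i - 1] in NEGATIONS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(classify_sentiment(["the", "food", "was", "great"]))           # positive
print(classify_sentiment(["the", "service", "was", "not", "good"]))  # negative
print(classify_sentiment(["the", "room", "was", "clean"]))           # neutral
```

The one-word negation window is a crude heuristic. For example, it scores "not very good" as positive, which is part of why learned models usually outperform pure lexicon approaches.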

4. Information Extraction:

- Text Classification: NLP models can classify text into predefined categories or labels based on its content. This can be used for tasks like spam detection, topic categorization, or sentiment classification.

- Named Entity Recognition: NER can extract specific information from text, such as identifying names of people, organizations, locations, or other relevant entities.

- Relation Extraction: NLP models can identify and extract relationships or associations between entities mentioned in the text, such as identifying the subject and object in a sentence or extracting relations like “works at” or “born in.”
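Text classification tasks such as spam detection are often introduced with a Naive Bayes classifier. The sketch below is a minimal multinomial Naive Bayes with add-one (Laplace) smoothing, assuming documents arrive already tokenized. The class name, tiny training set, and "spam"/"ham" labels are hypothetical.

```python
import math
from collections import Counter

class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing over tokenized documents."""

    def fit(self, documents, labels):
        self.doc_counts = Counter(labels)  # documents per label, used for the priors
        self.word_counts = {label: Counter() for label in self.doc_counts}
        for tokens, label in zip(documents, labels):
            self.word_counts[label].update(tokens)
        self.vocab = {word for counts in self.word_counts.values() for word in counts}
        return self

    def predict(self, tokens):
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label in sorted(self.doc_counts):  # sorted for deterministic tie-breaking
            # Work in log space to avoid underflow when multiplying many probabilities.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for word in tokens:
                if word in self.vocab:  # words never seen in training are skipped
                    score += math.log((self.word_counts[label][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

train_docs = [
    ["win", "cash", "now"],
    ["free", "prize", "win"],
    ["meeting", "at", "noon"],
    ["lunch", "meeting", "tomorrow"],
]
train_labels = ["spam", "spam", "ham", "ham"]
clf = NaiveBayesClassifier().fit(train_docs, train_labels)
print(clf.predict(["free", "cash"]))         # spam
print(clf.predict(["meeting", "tomorrow"]))  # ham
```

The "naive" assumption is that words are independent given the label. That is clearly false for real language, yet the model often works well as a fast baseline for topic and spam classification.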

5. Language Generation:

- Machine Translation: NLP models can be trained to translate text from one language to another.

- Text Summarization: NLP techniques can generate concise summaries of longer documents or articles.

- Text Generation: NLP models can generate human-like text, such as responses in chatbots or writing news articles.
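Summarization comes in two main forms. Abstractive systems generate new sentences, while extractive systems select the most informative existing ones. The sketch below swaps in the simpler extractive approach and scores each sentence by the average corpus frequency of its content words. The stopword list, the naive sentence splitter, and the function names are illustrative assumptions.

```python
import re
from collections import Counter

# Small illustrative stopword list so function words do not dominate the scores.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "are", "it", "on"}

def content_words(text):
    """Lowercase alphabetic tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def summarize(text, num_sentences=1):
    """Pick the top-scoring sentences by average word frequency, kept in original order."""
    # Naive sentence splitter: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        # Averaging (rather than summing) keeps long sentences from winning by length alone.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return " ".join(sentences[i] for i in sorted(ranked[:num_sentences]))

doc = ("NLP models process text. Summarization models shorten long text into key points. "
       "The weather was sunny.")
print(summarize(doc))  # NLP models process text.
```

Sentences that repeat the document's most frequent content words are treated as central, and the off-topic weather sentence scores lowest.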

These steps represent a simplified overview of NLP processes, and the specific techniques and algorithms employed can vary based on the task and complexity of the problem at hand. Modern NLP models, such as those based on deep learning architectures like Transformers, have achieved significant advancements in language understanding and generation, enabling more sophisticated and accurate results across various NLP tasks.

Tools Used In NLP

There are several tools and frameworks used in Natural Language Processing (NLP) to process, analyze, and model human language. Here are some commonly used tools in the field of NLP:

- NLTK (Natural Language Toolkit): NLTK is a popular Python library that provides a wide range of tools and resources for NLP. It offers functionalities for tokenization, stemming, lemmatization, part-of-speech tagging, parsing, and more. NLTK also includes corpora and datasets for training and testing NLP models.

- spaCy: spaCy is a Python library for NLP that emphasizes efficiency and production-readiness. It provides various capabilities such as tokenization, part-of-speech tagging, named entity recognition, dependency parsing, and sentence segmentation. spaCy is known for its speed and ease of use.

- Gensim: Gensim is an open-source Python library for topic modeling and document similarity analysis. It provides algorithms and functionalities for building and training word embeddings, such as Word2Vec and FastText, and performing document similarity tasks using techniques like Latent Semantic Analysis (LSA) and Latent Dirichlet Allocation (LDA).

- Stanford CoreNLP: Stanford CoreNLP is a suite of NLP tools developed by Stanford University. It offers a wide range of capabilities, including part-of-speech tagging, named entity recognition, parsing, sentiment analysis, coreference resolution, and more. CoreNLP provides both a command-line interface and a Java API.

- Transformers: Transformers is a state-of-the-art library for NLP developed by Hugging Face. It provides pre-trained models and architectures, including BERT, GPT, and RoBERTa, for tasks like text classification, named entity recognition, question answering, and machine translation. Transformers also offers easy-to-use APIs and tools for fine-tuning and customizing these models.

- OpenNLP: OpenNLP is a Java library for NLP tasks, developed by Apache. It offers tools and models for various tasks such as tokenization, part-of-speech tagging, sentence segmentation, chunking, named entity recognition, and more. OpenNLP provides both a command-line interface and Java APIs.

- Stanford NLP: Stanford NLP is a collection of NLP tools and resources developed by Stanford University. It includes Java libraries and models for tasks like part-of-speech tagging, named entity recognition, parsing, sentiment analysis, and more. Stanford NLP also provides pre-trained models and online demos for easy experimentation.

These are just a few examples of the many tools and libraries available for NLP. The choice of tool depends on the specific task, programming language preference, ease of use, and the features and functionalities required for the project at hand.

Business And Industrial Applications of NLP

Natural Language Processing (NLP) has a wide range of applications across various industries, offering valuable insights, automation, and improved communication. Here are some notable business and industrial applications of NLP:

- Customer Service and Support: NLP-powered chatbots and virtual assistants can handle customer inquiries, provide instant responses, and offer personalized assistance. They can understand and respond to customer queries, resolve common issues, and escalate complex problems to human agents.

- Sentiment Analysis and Social Media Monitoring: NLP techniques enable sentiment analysis, allowing businesses to gauge public opinion and sentiment towards their products, services, or brands. Social media monitoring tools leverage NLP to analyze and interpret social media posts, reviews, and comments, providing valuable insights for brand reputation management and customer feedback analysis.

- Market Research and Competitive Intelligence: NLP can extract and analyze data from various sources, including surveys, customer reviews, news articles, and forums. This enables businesses to gather market insights, track consumer trends, identify competitor strategies, and make data-driven decisions.

- Content Generation and Curation: NLP models can generate human-like text, aiding in content creation, summarization, and translation. They can automatically generate product descriptions, news articles, or personalized recommendations. NLP can also assist in curating relevant content from large volumes of text, improving content discovery and recommendation systems.

- Fraud Detection and Risk Assessment: NLP can analyze textual data, such as emails, customer interactions, and financial documents, to identify patterns or anomalies indicative of fraud or risk. It can aid in fraud detection, anti-money laundering efforts, and compliance monitoring.

- Voice Assistants and Smart Devices: NLP enables voice-driven interaction with devices and applications. Voice assistants like Amazon’s Alexa, Apple’s Siri, or Google Assistant rely on NLP techniques to understand spoken commands, answer questions, and perform tasks, such as setting reminders, playing music, or controlling smart home devices.

- Healthcare and Medical Applications: NLP can extract information from medical records, clinical notes, and research papers, aiding in clinical decision support, medical coding, and information retrieval. It can facilitate the analysis of patient feedback, help detect adverse events, and process the text that accompanies medical images, such as radiology reports.

- Human Resources and Talent Management: NLP can automate resume screening, extracting relevant information from resumes, and matching candidates to job requirements. It can assist in employee feedback analysis, sentiment analysis of employee surveys, and identifying employee sentiments and engagement levels.

These are just a few examples of how NLP is utilized in business and industrial applications. The versatility and capabilities of NLP continue to expand as research and development in the field progress, enabling organizations to leverage language processing technologies to gain insights, improve processes, and enhance customer experiences.

A common thread across NLP applications such as dialogue systems, machine translation, and information extraction is making unstructured text accessible to software, for example by enabling structured search over it.

The global NLP market, estimated at $11.1 billion in 2020, is expected to increase to $341.5 billion by 2030, with a CAGR of 40.9% between 2021 and 2030.
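As a quick consistency check, the quoted growth rate follows from the two market-size figures. Treating 2020 to 2030 as ten years of compounding, the compound annual growth rate (CAGR) works out as:

$$
\text{CAGR} = \left(\frac{341.5}{11.1}\right)^{1/10} - 1 \approx (30.77)^{0.1} - 1 \approx 0.409 = 40.9\%
$$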

What Does the Future Look Like for NLP?

The future of Natural Language Processing (NLP) is promising, with exciting advancements and developments on the horizon. Here are some key areas that indicate the direction NLP is heading:

- Improved Language Understanding: Research and development efforts continue to focus on enhancing language understanding capabilities. Deep learning models, particularly Transformer-based architectures, have significantly improved tasks like text classification, named entity recognition, and sentiment analysis. As models continue to grow larger and more sophisticated, they are expected to achieve even higher levels of accuracy and nuanced understanding.

- Multilingual NLP: NLP is expanding to support a wider range of languages. While English has traditionally received more attention, there is a growing interest in building models and resources for other languages. Efforts are underway to develop pre-trained models and datasets for various languages, enabling better accessibility and inclusivity in NLP applications globally.

- Contextual Understanding: Future NLP models are expected to improve contextual understanding by considering the broader context of a text or conversation. Techniques like coreference resolution, which helps in understanding pronouns and references, and contextual embeddings, which capture contextual information, contribute to more accurate language comprehension.

- Ethical Considerations: As NLP becomes more pervasive, the ethical implications associated with its applications are gaining attention. Concerns related to privacy, bias, fairness, and the responsible use of NLP technology are becoming increasingly important. Efforts are being made to address these challenges and develop ethical guidelines to ensure NLP is used responsibly and in a manner that respects user rights and societal values.

- Domain-Specific NLP: NLP is being customized and tailored for specific domains and industries, such as healthcare, legal, finance, and customer support. This specialization allows for more accurate and relevant results in domain-specific tasks, leveraging domain-specific language models and fine-tuning techniques.

- Conversational AI: Conversational agents, such as chatbots and virtual assistants, are continuously improving their language understanding and generation capabilities. NLP models are being trained on massive conversational datasets to simulate more natural and human-like conversations, enabling more effective and engaging interactions.

- Few-shot and Zero-shot Learning: Advancements in few-shot and zero-shot learning aim to reduce the dependency on large amounts of labeled data for training NLP models. Techniques such as meta-learning, transfer learning, and domain adaptation enable models to generalize and learn from limited labeled examples or transfer knowledge across different domains or languages.

- Interdisciplinary Applications: NLP is increasingly being integrated with other fields such as computer vision, knowledge graphs, and robotics to enable more comprehensive and multimodal understanding. This integration opens up possibilities for advanced applications that can process and comprehend both textual and visual information, leading to more sophisticated AI systems.

These are just some potential directions for the future of NLP. As research continues and technology advances, we can expect NLP to play an even more integral role in our lives, facilitating seamless human-computer interaction and powering a wide range of innovative applications across industries.